Constructing a NEP dipole model¶

Introduction¶

In this tutorial, we will show you how to use the GPUMD package to train a NEP dipole model and analyze the results. For theoretical background on the NEP approach, please read the documentation. A paper that introduces the NEP dipole model systematically is in preparation.

Prerequisites¶

You need to have access to an NVIDIA GPU with compute capability >= 3.5. Besides it, CUDA Toolkit 9.0 or higher is needed to compile the GPUMD package. The latest GPUMD (we recommend v3.7 or newer) can be downloaded here. Unpack it, go to the src directory, and run the command make -j to compile. If success, you should get two executables: gpumd and nep in the src directory. The flag “-std=c++14” in makefile can be modified

to “-std=c++11” if you failed the compilation process.

Train a NEP dipole model for QM7B database¶

To train a NEP dipole model, two files: train.xyz and nep.in are mandatory. The train.xyz file contains necessary training data, and the nep.in file contains some parameters used for training. Optionally, one can also provide a test.xyz file that has similar contents as thetrain.xyz for validation.

Prepare the train.xyz and test.xyz files¶

The

train.xyz/test.xyzfile should be prepared according to the documentation.In this tutorial,

train.xyzandtest.xyzfiles are already prepared in the current folder, which contain 5768 and 1443 structures, respectively. Structures as well as dipole data in thetrain.xyzandtest.xyzare taken from the QM7B database that contains 7211 organic compounds.The dipole data are embedded in the comment lines oftrain.xyz/test.xyz, organized as dipole=“:math:`mu_{x}` :math:`mu_{y}` :math:`mu_{z}`” per structure. The dipole data were computed at the CCSD/d-aug-cc-pVDZ level of theory by Yang and collaborators. Of course, you can use any quantum mechanics package and any theoretical method such as the DFT to calculate the total dipole moment. However, structures in QM7B database are without lattice information, which is mandatory in the training of NEP models. Therefore, a cubic box with a dimension of 100 \(\rm \mathring{A}\) was set for every structure. We recommend the ASE package to manipulate thetrain.xyz/test.xyzfile.

Prepare the nep.in file.¶

First, read the documentation regarding input paramaters carefully. The most important parameter is

model_type, which controls the training types.model_type=0, which is the default, stands for a training task of PES;model_type=1, a training task of dipole;model_type=2, a training task of polarizability. Here, we setmodel_type=1. A minimalnep.infile reads:

type 6 H C N O S Cl

model_type 1

The parameter type is also important, which specified the element kinds in the system. The first value 6 is the number of elements in train.xyz/test.xyz, followed by the detailed element names, in this case, H C N O S Cl. The order will be frozen in the model after training. We have prepared the nep.in file in the current folder, which reads:

type 6 H C N O S Cl

model_type 1

cutoff 6 4

n_max 6 6

basis_size 10 10

l_max 4 2 1

neuron 10

population 80

batch 10000

generation 200000

lambda_1 0.001

lambda_2 0.001

The values of parameters cutoff, n_max,basis_size,l_max, neuron,population used here were tested elaborately, which achieved a good balance between the accuracy and costs. The batch size depends on your GPU device. On a GeForce RTX 3090 with 24 GB memory, you can train the model with all data at the same time, therefore, we set batch a value greater than 5768. The lambda_1 and lambda_1 are the regularization

paramaters. You can take the default values trustingly just ignore these two parameters. The GPUMD will adjust these two parameters dynamically according to the real-time loss. Alternatively, you can set lambda_1 and lambda_2 a fixed value which should be of the same order as the target quantities upon convergence. In this example, we set lambda_1=lambda_2=0.001.We

will look back on it after analyzing the results.

Run the nep executable¶

Run the following command in the working directory

path/to/nep # if you use linux

path\to\nep # if you use Windows

It took about 22 hours to run 200,000 generations using a GeForce RTX 3090. One can kill the task at any time and also restart it just by running the nep again.

Check the training results¶

After you launch the

neptask, there should be some output on the screen. We encourage the user to read the screen output carefully. It can help to understand the working flow ofnep.Some files will be generated in the current folder and will be updated every 100 generations (some will be updated every 1000 generations). Therefore, one can check the results even before finishing the maximum number of generations as set in

nep.in.If the user has not read the documentation regarding the output files, it is time to read about those here.

We will use Python to visualize the results in some of the output files next. We first load

pylabthat we need.

[1]:

from pylab import *

Checking the loss.out file¶

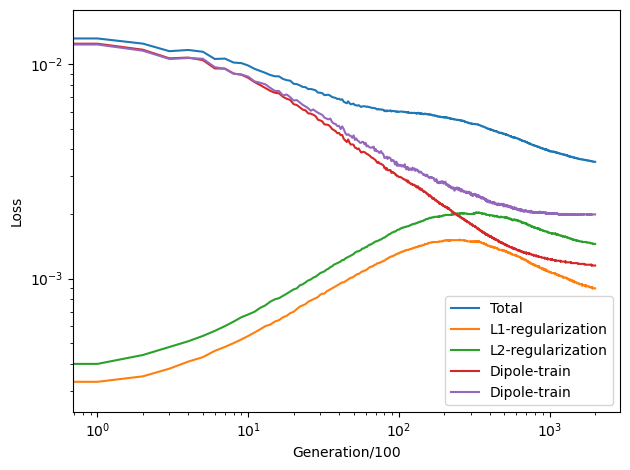

We see that the \(\mathcal{L}_1\) and \(\mathcal{L}_2\) regularization loss functions first increases and then decreases, which indicates the effectiveness of the regularization.

The dipole loss is the root mean square error (RMSE) of dipole per atom, which converges to about 0.001-0.003 a.u./atom.

[11]:

loss = loadtxt('loss.out')

loglog(loss[:, 1:4])

loglog(loss[:, 4:6])

xlabel('Generation/100')

ylabel('Loss')

legend(['Total', 'L1-regularization', 'L2-regularization', 'Dipole-train', 'Dipole-train'])

tight_layout()

The loss terms ‘Dipole-train’ and ‘Dipole-train’ converged after 20,000 generations. That’s why we choose the paramaters lambda_1=lambda_2=0.001. But you can take the default values trustingly.

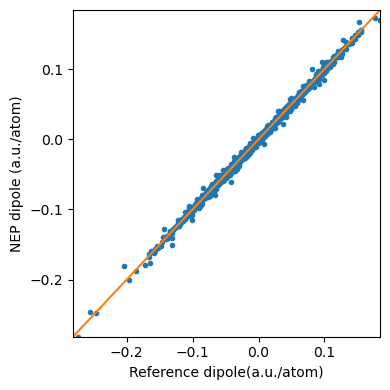

Checking the dipole_test.out file¶

The dipole_test.out is organized as “\(\mu_{x}\) \(\mu_{y}\) \(\mu_{z}\) \(\mu_{x}\) \(\mu_{y}\) \(\mu_{z}\)”, one line per structure. The first three values are predicted by NEP, while the last three values are the references we provide in test.xyz(but divided by the number of atoms).

[2]:

dipole_test = loadtxt('dipole_test.out')

ref_values = np.hstack([dipole_test[:,3],dipole_test[:,4],dipole_test[:,5]])

nep_values = np.hstack([dipole_test[:,0],dipole_test[:,1],dipole_test[:,2]])

plot(ref_values,nep_values , '.')

plot(linspace(dipole_test.min(),dipole_test.max()), linspace(dipole_test.min(),dipole_test.max()), '-')

xlim((dipole_test.min(),dipole_test.max()))

ylim((dipole_test.min(),dipole_test.max()))

xlabel('Reference dipole(a.u./atom)')

ylabel('NEP dipole (a.u./atom)')

fig = plt.gcf()

fig.set_size_inches(4,4)

tight_layout()

Get molecular dipole moment¶

Numbers of atoms are varied for different structures in the QM7B database. To get their molecular dipole moment, we should know their numbers of atoms. The following Python script can be used to obtain the molecular dipole moment for each structure in test.xyz.

[4]:

import os

file_size = os.path.getsize("test.xyz")

noa_list = []

with open("test.xyz") as reader:

while (reader.tell() < file_size):

noa = int(reader.readline())

noa_list.append(noa)

for i in range(noa+1):

reader.readline()

atomic_dipole_test = loadtxt('dipole_test.out')

molecular_dipole = dipole_test * np.tile(np.array(noa_list).reshape(-1,1),(1,6))

print(molecular_dipole)

[[-1.0092135 -0.4079592 -0.2488834 -1.0072993 -0.4016998 -0.2416006 ]

[ 0.16071358 0.14740241 -0.2966058 0.13049999 0.14509993 -0.2988005 ]

[-0.2512226 0.6660957 0.01369258 -0.2492999 0.6617998 0.02760001]

...

[ 0.06124566 1.1426184 -0.1661898 0.06629994 1.1297006 -0.1766996 ]

[-0.5868126 1.0639849 -0.00530762 -0.5317004 1.0760002 -0.0058 ]

[ 0.5859885 -0.02764035 -1.081206 0.5616 -0.02710005 -1.0708005 ]]

Prediction¶

You can switch the workding mode from training to prediction by adding an extra parameter

prediction 1innep.in, and provide atrain.xyz. In this mode, the dipole data is not necessary intrain.xyz. TheNEPwill use-1e+06as the reference values indipole_train.out. We also provide an example in theprediction_new_moleculedirectory.

Restart¶

After each 100 steps, the

nep.restartfile will be updated.If

nep.restartexists, it means you want to continue the previous training. Remember not to change the parameters related to the descriptor and the number of neurons. Otherwise the restarting behavior is undefined.If you want to train from scratch, you need to delete

nep.restartfirst (better to first make a copy of all the results from the previous training).

Author¶

Nan Xu

[ ]: